In Scene 2 ("Embers"), our protagonist Mira reads a fantastical fable about her family heritage, written by her enigmatic grandmother Miranda. Emily and I wanted to make a scene where the user strikes virtual matches to advance the story, and "Embers" was a perfect stylistic fit for this interaction. This scene was by far our most technically challenging to implement, because of the many audiovisual cycles and interactions that have to chain together.

SCENE CONCEPT

You are alone in a completely dark VR space. A glowing 3D matchbox appears in front of you, and seven 3D matches fall out. You can pick them up one by one with your controller and strike each one by swinging it through the air, which makes an animated flame appear. When you hold a lit match out in front of you, it illuminates animated cursive writing, accompanied by a voiceover that tells a section of the story. The flame's animation changes two or three times during the voiceover. The full story is told across the sequence of seven matches.

SCENE ARCHITECTURE

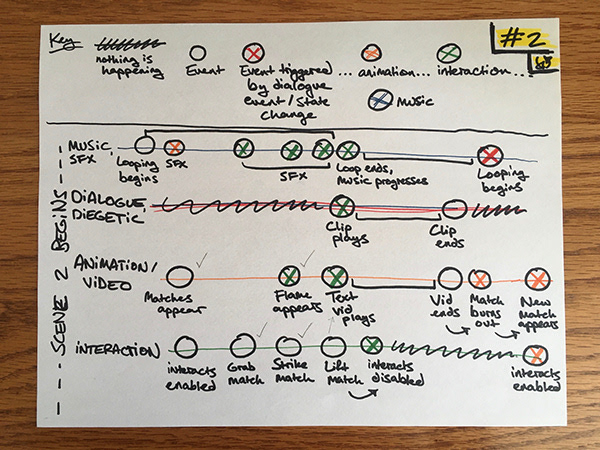

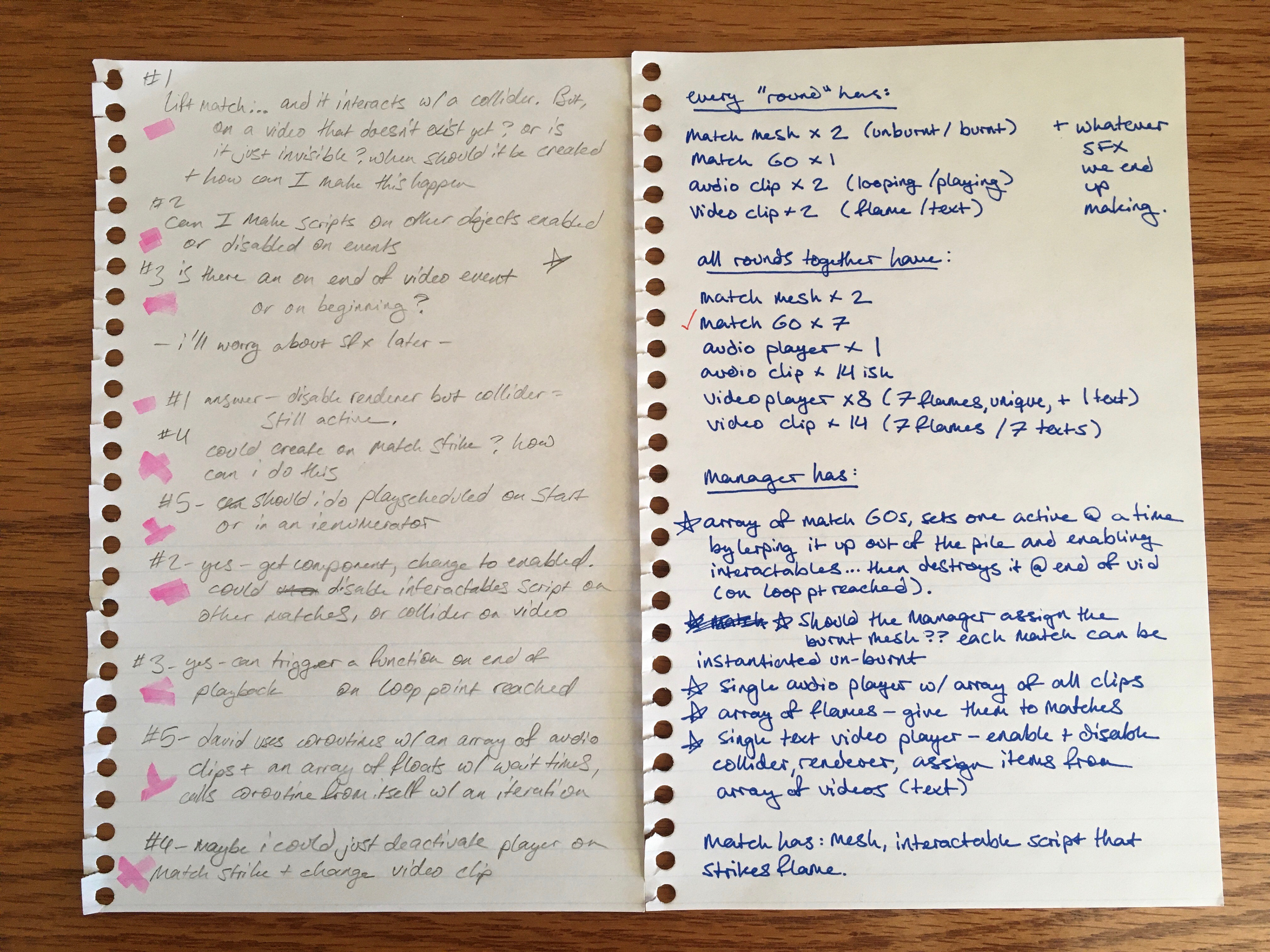

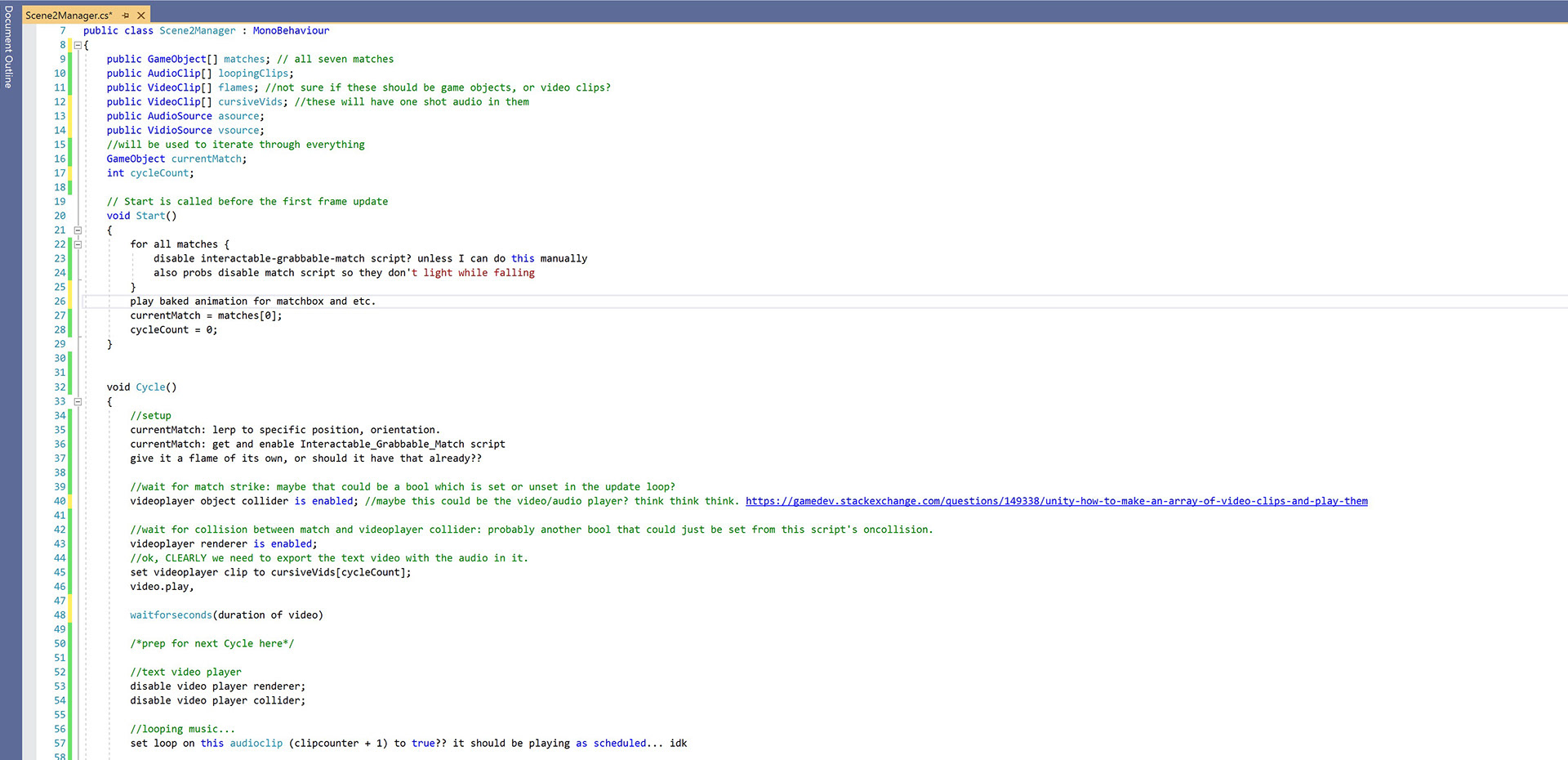

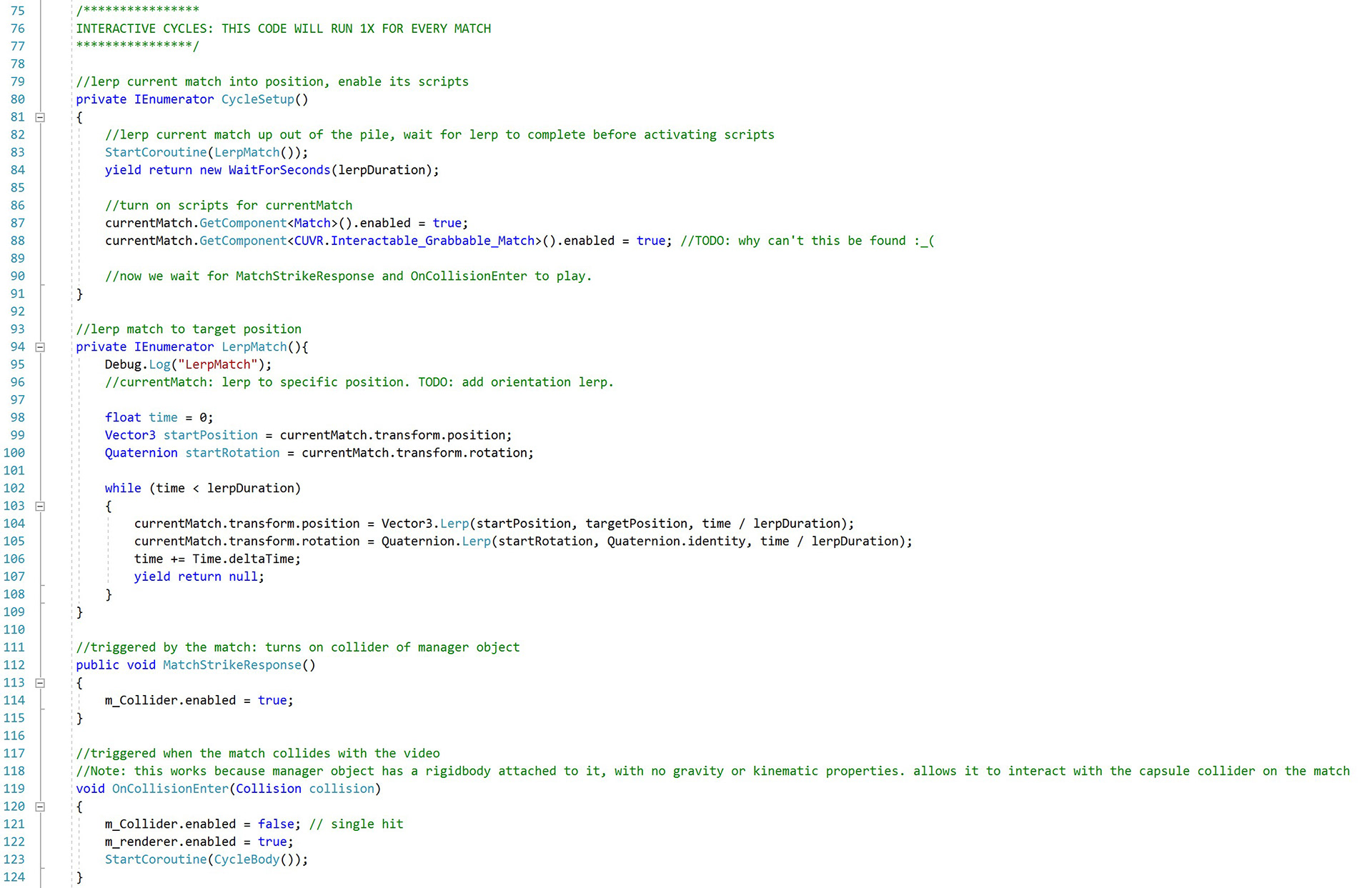

Conceptually, this scene was very difficult for me to wrap my head around, because so many events chain together. Seven matches, seven looping audio clips, seven cursive videos, seven voiceover clips, and 28+ animated flame videos/states all have to turn on and off in exactly the right places and at exactly the right times, triggered both by user interactions and by each other. Ultimately, I decided to handle all of this with a single manager script that coordinates the matches, activating and deactivating their scripts and prompting them to run functions and coroutines. It made the most sense to place this manager script on the gameobject that plays the cursive videos.

Planning and pseudocode:

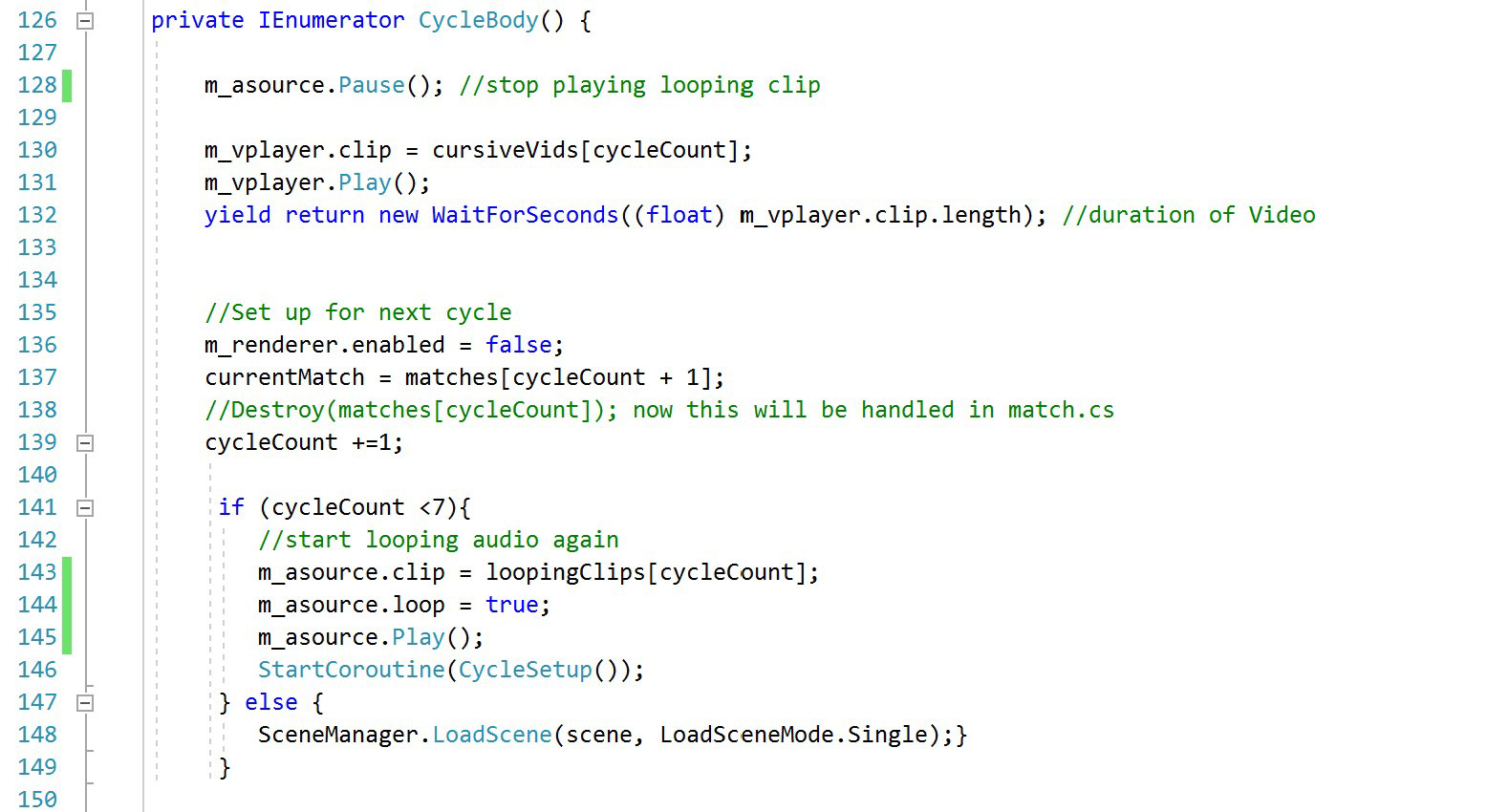

Final script: a cycle of coroutines, plus functions triggered by other interactive events, that runs once for every match.
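The project's actual manager script is Unity C# and isn't reproduced here. As a rough, hypothetical illustration of the idea, the Python sketch below models the per-match cycle as a generator, which pauses at each `yield` much like a Unity coroutine pauses at `yield return`. The event names (`grabbed`, `struck`, `lifted`, `video_finished`) and the `SceneManager` class are assumptions made for illustration, not names from the project.

```python
from dataclasses import dataclass, field
from typing import Iterator, List

# Hypothetical event names standing in for the Unity callbacks in the scene.
STAGES = ["grabbed", "struck", "lifted", "video_finished"]


def match_cycle() -> Iterator[str]:
    """One pass of the per-match cycle as a generator 'coroutine'.

    Each yield hands back the event this match is waiting for; the manager
    resumes the generator only when that event actually arrives.
    """
    for stage in STAGES:
        yield stage
    # Falling off the end means the cursive video finished and the match
    # has faded out and been destroyed.


@dataclass
class SceneManager:
    match_count: int = 7
    current: int = 0  # index of the match whose cycle is running
    log: List[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        self._cycle = match_cycle()
        self._expected = next(self._cycle)

    def on_event(self, event: str) -> None:
        if self.current >= self.match_count:
            return  # all seven matches are done; ignore further input
        if event != self._expected:
            return  # out-of-order event, e.g. lifting a match before striking it
        self.log.append(f"match {self.current + 1}: {event}")
        try:
            self._expected = next(self._cycle)
        except StopIteration:
            # This match's cycle is over; start the next match's cycle.
            self.current += 1
            if self.current < self.match_count:
                self._cycle = match_cycle()
                self._expected = next(self._cycle)
```

Because the manager only advances when the expected event arrives, stray input (like waving an unlit match at the video plane) is simply ignored rather than breaking the sequence.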

MATCHES

Emily created the matches as simple 3D geometries in Blender.

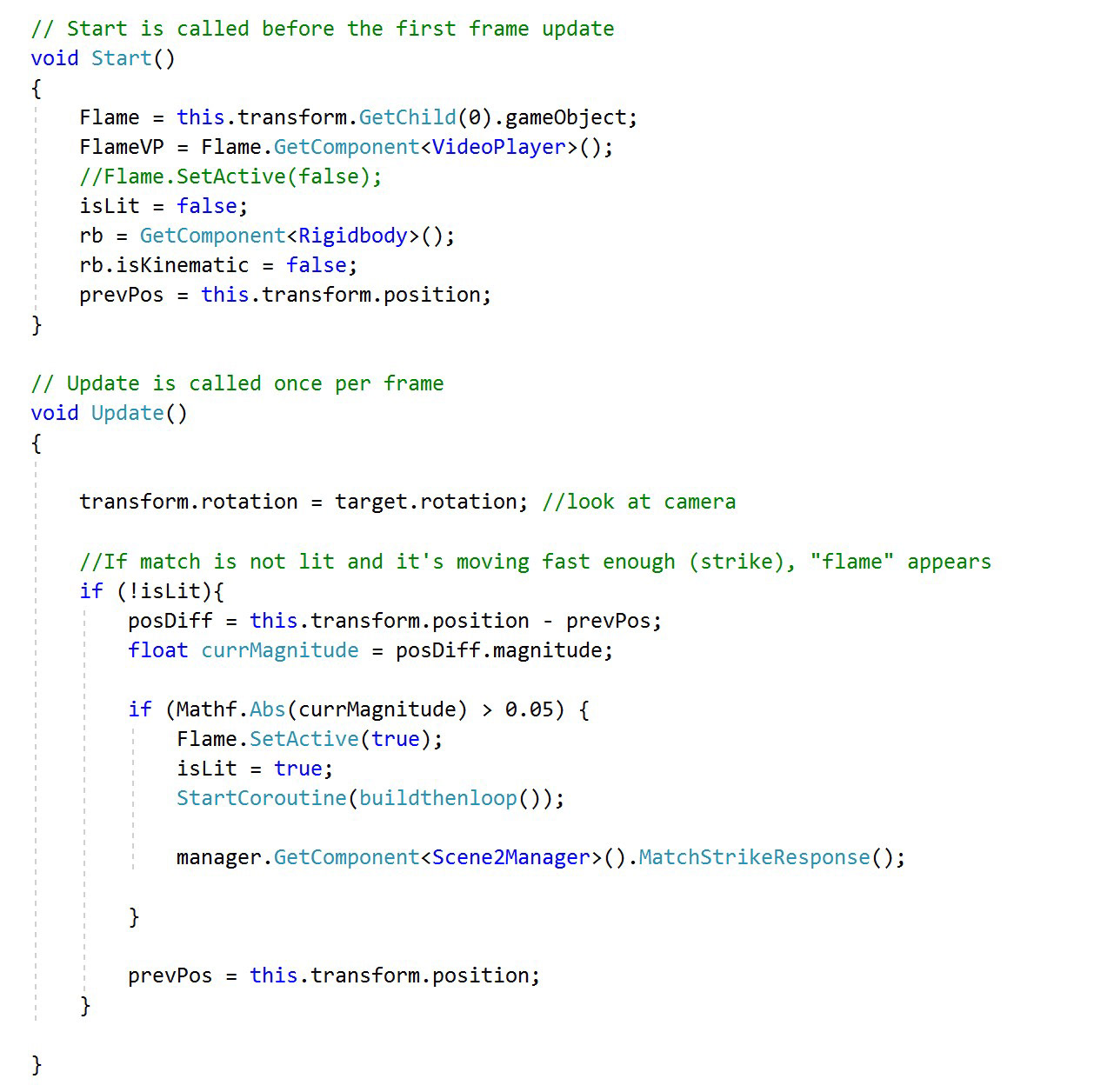

In Unity, each match has three key parts: a flame gameobject parented to the tip of the match, which is initially inactive; an Interactable_Grabbable script, which lets the user interact with the match using the controllers; and a Match script, which controls the video playback and visibility of its flame.

INTERACTIVITY AND PHYSICS

In my opinion, the most exciting part of this scene is the interactivity. The user can grab each match with their controllers. Once grabbed, the match needs to float towards the user. Then, when the user swings their controller fast enough, the match "strikes" by activating its flame gameobject, which is disabled at the start. When the user lifts the lit match up, the next cursive video begins. Finally, at the end of the cursive video, the match fades away and destroys itself.
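The "swing fast enough" check could be sketched along these lines, assuming the controller's tracked position is sampled once per frame. This is a hypothetical Python illustration rather than the Unity code; the `StrikeDetector` name, the 2.5 m/s threshold, and the three-frame window are placeholder values, not the project's.

```python
import math
from collections import deque
from typing import Deque, Tuple

Vec3 = Tuple[float, float, float]


class StrikeDetector:
    """Lights the match once the controller moves faster than a threshold.

    strike_speed (metres per second) and window (frames) are placeholder
    tuning values, not numbers taken from the project.
    """

    def __init__(self, strike_speed: float = 2.5, window: int = 3) -> None:
        self.strike_speed = strike_speed
        # Keep window + 1 samples so speed is measured across `window` frame steps.
        self.samples: Deque[Tuple[float, Vec3]] = deque(maxlen=window + 1)
        self.lit = False

    def update(self, t: float, pos: Vec3) -> bool:
        """Feed one (time, position) sample; True only on the frame the match lights."""
        self.samples.append((t, pos))
        if self.lit or len(self.samples) < 2:
            return False
        (t0, p0), (t1, p1) = self.samples[0], self.samples[-1]
        dt = t1 - t0
        if dt <= 0:
            return False
        speed = math.dist(p0, p1) / dt  # straight-line distance over elapsed time
        if speed >= self.strike_speed:
            self.lit = True  # in Unity, this is where the flame gameobject would be activated
            return True
        return False
```

Measuring across a few frames rather than a single frame-to-frame difference makes the check less sensitive to tracking jitter, and the `lit` flag ensures the flame only "strikes" once.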

Upon being grabbed, the match needs to give up its own physics so that it doesn't fall away from the controller as soon as the user stops actively clutching it. Specifically, it needs to be a kinematic object parented to the controller. However, to collide successfully with the video player plane and wake it up, it needs to become a non-kinematic rigidbody again. This was challenging to resolve, but I eventually got it working by wrapping the match in an empty gameobject that stays kinematic until a specific moment.

To do all this, I used David Lobser's custom VR interaction system.

FLAMES

Initially, Emily hoped to create the flames for the matches as animated 3D models in Blender. She developed a great workflow with the Grease Pencil tool and recorded this flame prototype (note: this is not a 2D render, but a 3D animation!).

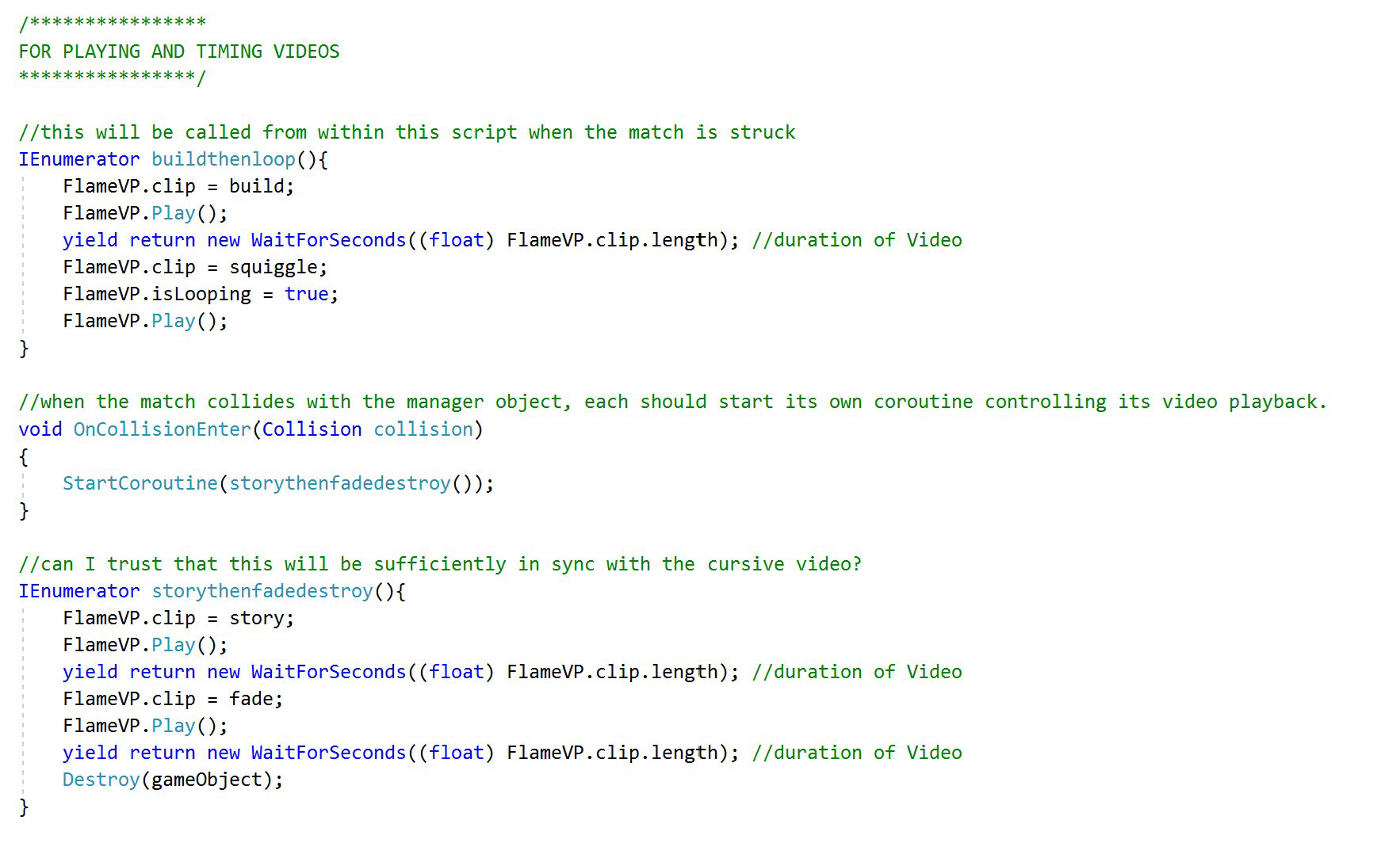

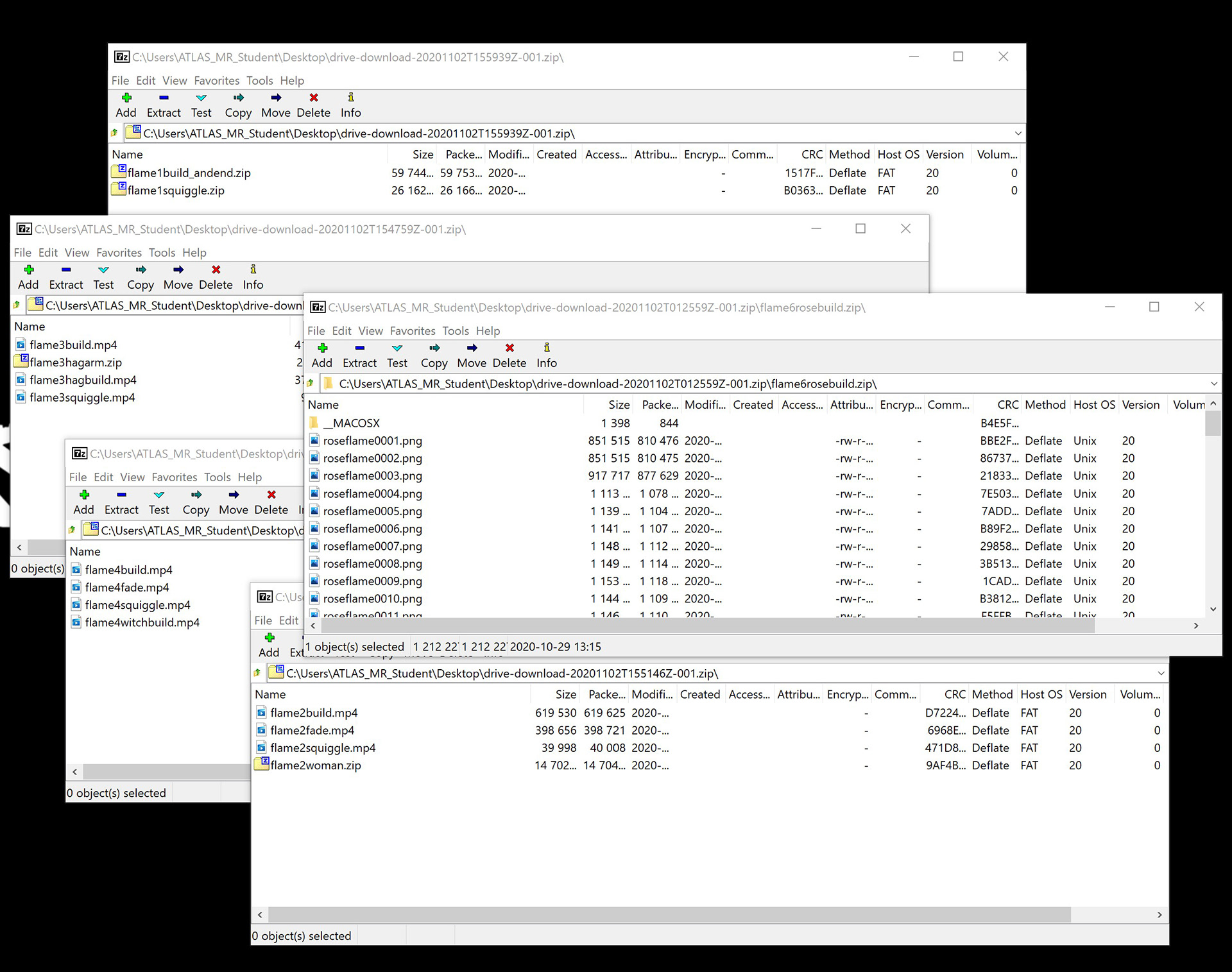

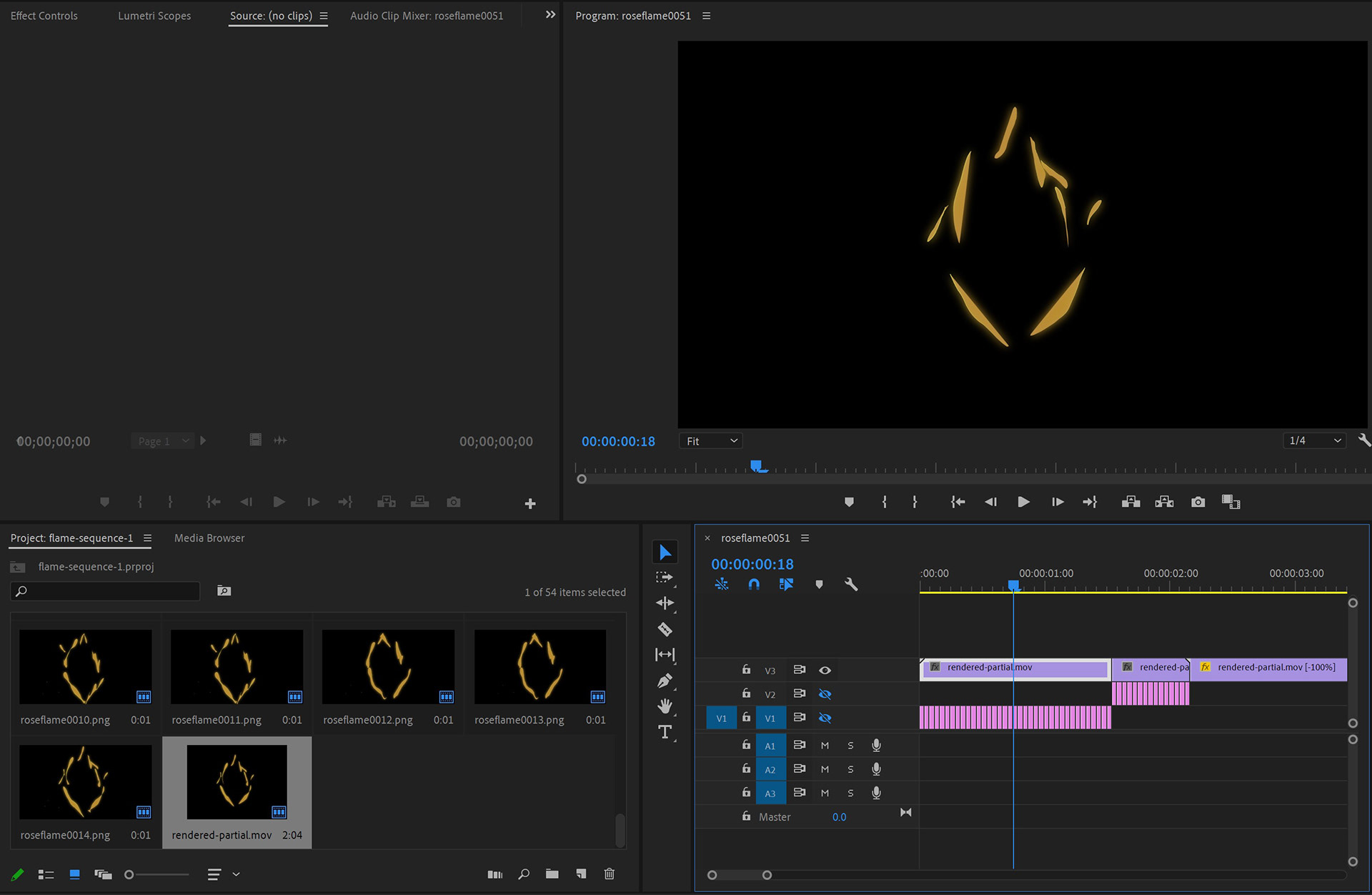

However, after extensive googling and trying every approach we could find, we realized that it wasn't feasible to import these Grease Pencil sketches into Unity as 3D animations. We had to render the individual Grease Pencil frames out as 2D images and use them as rapidly swapping materials on a plane. I tried this out, but some of the sequences had so many frames that this approach wasn't practical. Instead, I imported all the frames into Premiere Pro and rendered them out as several video sequences for each flame: a flame build, a flame maintenance, an animated motif build and maintenance, and a flame fade. Emily created beautiful, unique animations for each of these sequences for every match, so properly exporting those animations and syncing them up with our soundtrack was a nontrivial task. I also needed to write a few more coroutines to switch between those video clips at the right times.
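One way to picture those clip-switching coroutines is as a cue sheet: each flame clip starts at a fixed time after the strike, and at any moment the active clip is the one with the latest start time that has already passed. The Python sketch below assumes that model; the clip labels, the timings in `EXAMPLE_CUES`, and the helper names are placeholders, not the real values, which were tied to the soundtrack.

```python
from bisect import bisect_right
from typing import List, Optional, Tuple

# (seconds after the strike, clip label). Placeholder timings, not the real cue sheet.
Cue = Tuple[float, str]

EXAMPLE_CUES: List[Cue] = [
    (0.0, "flame_build"),
    (1.5, "flame_maintenance"),
    (8.0, "motif_build"),
    (10.0, "motif_maintenance"),
    (20.0, "flame_fade"),
]


def active_clip(cues: List[Cue], t: float) -> Optional[str]:
    """Return the clip that should be showing t seconds after the strike."""
    ordered = sorted(cues)
    starts = [start for start, _ in ordered]
    i = bisect_right(starts, t) - 1  # last cue whose start time is <= t
    return ordered[i][1] if i >= 0 else None


def clip_changes(cues: List[Cue], prev_t: float, t: float) -> List[str]:
    """Clips whose start time falls in (prev_t, t]: the switches due this frame."""
    return [clip for start, clip in sorted(cues) if prev_t < start <= t]
```

A per-frame loop (or a coroutine that waits until the next cue) can call `clip_changes` with the previous and current times and swap in each clip it returns, while looping "maintenance" clips keep playing until the next cue arrives.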

Rendering those animations with the correct transparency was another surprisingly difficult undertaking. I think it's very stylistically important that our flames have organic shapes and don't just look like rectangular popsicles. To achieve this, the flame videos need to be rendered on a transparent background, and the alpha channel of the flames needs to control the transparency of the plane meshes themselves. This is a two-step process: first, the video itself needs to be exported as a QuickTime video with an encoded alpha channel; second, the planes need a custom shader applied. With some help from David, I learned how to write this shader, and I later used it for Scene 9 as well.
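For reference, the standard straight-alpha "over" blend that a transparent shader typically performs (in Unity ShaderLab, `Blend SrcAlpha OneMinusSrcAlpha`) is written out below as a per-pixel Python function. This illustrates the math only; it is not the custom shader from the project. Wherever the flame video's alpha is 0, the plane contributes nothing, so the rectangle's edges vanish.

```python
from typing import Tuple

RGB = Tuple[float, float, float]


def blend_over(src_rgb: RGB, src_alpha: float, dst_rgb: RGB) -> RGB:
    """Straight-alpha 'over' blend: result = src * a + dst * (1 - a), per channel.

    src is the flame video's pixel; dst is whatever is already behind the plane.
    """
    a = min(max(src_alpha, 0.0), 1.0)  # clamp alpha to [0, 1]
    r, g, b = (s * a + d * (1.0 - a) for s, d in zip(src_rgb, dst_rgb))
    return (r, g, b)
```

This is also why the video has to carry a real alpha channel: without one, every pixel of the plane has alpha 1 and the whole rectangle covers the background.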

CURSIVE VIDEOS + VOICEOVERS

Once again, it made the most sense to implement these animations as videos. Handwriting with a stylus in Procreate can be recorded as a timelapse and easily edited in After Effects to sync it up with the voiceover.

In Unity, I decided to make a single plane to play all the videos, rather than instantiating a new plane on every cycle. This plane is also a convenient place to attach the scene manager script. On every cycle, its renderer and collider are enabled and disabled at specific points. When the match touches the collider, the renderer turns on and the plane's video player automatically begins the next cursive writing video. Rather than sync up the voiceover and video in Unity, I opted to bake each voiceover into its video as the audio track and play that audio from the video player component directly through the Rift headphones. The video below features a (horribly synced) cursive video demo with audio playing directly through the headphones, as well as looping music on either end.
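The single-plane approach can be modeled as a small state object that tracks which clip plays next and which components are on. In this hypothetical Python sketch, `renderer_enabled` and `collider_enabled` stand in for toggling the Unity Renderer and Collider components, and `arm`, `on_match_enter`, and `on_clip_finished` are made-up hook names, not the project's.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class CursivePlane:
    """Hypothetical model of the one reusable video plane."""

    clips: List[str]
    next_index: int = 0
    renderer_enabled: bool = False
    collider_enabled: bool = False
    now_playing: Optional[str] = None

    def arm(self) -> None:
        """Called once a match is lit: let the plane detect that match."""
        if self.next_index < len(self.clips):
            self.collider_enabled = True

    def on_match_enter(self) -> bool:
        """The lit match touched the plane: show it and start the next clip."""
        if not self.collider_enabled:
            return False
        self.collider_enabled = False  # ignore repeat hits during playback
        self.renderer_enabled = True
        self.now_playing = self.clips[self.next_index]
        self.next_index += 1
        return True

    def on_clip_finished(self) -> None:
        """Hide the plane again until the next match is lit."""
        self.renderer_enabled = False
        self.now_playing = None
```

Keeping the collider off except when a lit match is expected is what stops an unlit match, or a second bump during playback, from skipping ahead in the story.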

LOOPING MUSIC

It turns out, very sadly, that you can't just tell Unity "loop seconds 5-12 of this track until I say stop!" Instead, those seven seconds need to be imported as their own clip, and then playback can be controlled easily from a script. Xavier taught me how to choose effective cut points for an audio clip so that it loops seamlessly.
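A common rule of thumb for seamless loop points is to cut at zero crossings of the waveform, so the jump from the loop's end back to its start doesn't produce an audible click. This is an assumption about the technique, not a record of Xavier's exact method. The Python sketch below snaps requested start and end times (for example 5 s and 12 s) to the nearest rising zero crossing in a mono sample array; the function names are hypothetical.

```python
from typing import Sequence, Tuple


def nearest_rising_zero_crossing(samples: Sequence[float], index: int) -> int:
    """Index of the rising zero crossing closest to `index`.

    A rising crossing is a sample i where samples[i - 1] < 0 <= samples[i].
    Falls back to `index` unchanged if the signal never crosses zero upward.
    """
    best = None
    for i in range(1, len(samples)):
        if samples[i - 1] < 0.0 <= samples[i]:
            if best is None or abs(i - index) < abs(best - index):
                best = i
    return index if best is None else best


def loop_bounds(samples: Sequence[float], sample_rate: int,
                start_s: float, end_s: float) -> Tuple[int, int]:
    """Snap a requested loop (in seconds) to rising zero crossings.

    When samples[start:end] is played and then restarted, the last sample
    played (just below zero) is followed by one at or just above zero, so
    the waveform stays roughly continuous at the seam.
    """
    start = nearest_rising_zero_crossing(samples, round(start_s * sample_rate))
    end = nearest_rising_zero_crossing(samples, round(end_s * sample_rate))
    return start, end
```

In the workflow described above, the snapped range would then be exported as its own clip and looped from a script.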

LIGHTING

A few little finishing touches to compensate for the fact that we're using 2D elements in VR rather than 3D, which is generally a no-no:

I noticed that all of these planes looked awful when viewed at an angle from the headset, so I added code to the flames and the video player that makes them always turn to face the camera (which in VR should always be positioned at the center of the user's headset).
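Facing the camera (often called billboarding) comes down to pointing each plane at the camera's position every frame. The Python sketch below computes that direction, plus a yaw-only angle that keeps the writing upright, assuming Unity-style conventions (y up, yaw 0 facing +z, positive yaw turning toward +x). The function names are hypothetical, and depending on which way a plane's mesh faces, the direction may need to be flipped.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def facing_direction(plane_pos: Vec3, camera_pos: Vec3) -> Vec3:
    """Unit vector from the plane toward the camera (a full look-at direction)."""
    d = tuple(c - p for c, p in zip(camera_pos, plane_pos))
    length = math.sqrt(sum(x * x for x in d))
    if length == 0.0:
        raise ValueError("plane and camera are at the same position")
    x, y, z = (v / length for v in d)
    return (x, y, z)


def billboard_yaw_deg(plane_pos: Vec3, camera_pos: Vec3) -> float:
    """Yaw in degrees that turns the plane's +z axis toward the camera.

    Ignores height so the plane stays upright. Assumes y is up, yaw 0 faces +z,
    and positive yaw turns toward +x.
    """
    dx = camera_pos[0] - plane_pos[0]
    dz = camera_pos[2] - plane_pos[2]
    return math.degrees(math.atan2(dx, dz))
```

The yaw-only version is a design choice: a full look-at also tilts the plane up or down as the user's head moves, which can make cursive text feel like it's leaning.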

For lighting, I attached a directional light that always has the same position and rotation as the camera (for a directional light, only the rotation actually affects the lighting). As a result, whatever the user is looking at is always brightly lit. To compensate for that, I went through and disabled specular highlights and shadows on all of the planes.